Engineering leaders spend years learning to build fault-tolerant systems. We design for failure. We instrument everything. We think carefully about what happens when a node goes down, when traffic spikes, when dependencies become unreliable. Then we walk into a meeting about team structure and don’t apply those same lessons.

The highest-leverage work for most engineering leaders isn’t solving hard technical problems. It’s architecting human systems: communication patterns, decision-making processes, and information flow. The irony is that engineers who’d never deploy a service without understanding its failure modes routinely build organizations without asking the same basic questions.

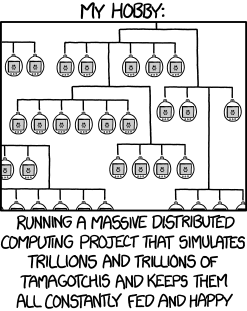

Here’s the mental model I keep coming back to: treat the organization itself like a distributed system. Not as a metaphor, but as an actual design exercise.

The Framework

Before adding the complexity of humans — their ambitions, anxieties, baggage, and interpersonal dynamics — stress-test the structure on paper. If the org chart doesn’t work as a system design, it almost certainly won’t work with people in it.

Every system should be designed with the ability to answer (at least) the questions listed below. To be clear: this is a simplified view of distributed systems. Real systems involve considerably more complexity, such as consensus algorithms, partition tolerance tradeoffs, and failure detection mechanisms, that I’m glossing over entirely. But the simplification is the point. These concepts, even at a high level, provide a more rigorous mental model for organizational design and dynamics than most leaders ever apply. You don’t need to understand Paxos to ask whether your org has a resync mechanism.

You don’t need to be running a 500-person engineering org to benefit from asking these questions. The degree of formalization scales with org size. A 20-person startup doesn’t need the same mechanisms as a large enterprise. But the questions apply at any scale. A small team might answer “who has decision authority when the tech lead is out?” with a quick conversation and a shared understanding. A larger org might need documented runbooks. The answers look different; the questions don’t.

- Operating States: What does barely functioning look like? What does normal actually look like? What does optimized look like? How do we evaluate or measure these states or state transitions?

- Ownership and Responsibilities: What are the roles and responsibilities for each part of the system? Who is the responsible party for that part of the system?

- Failure Handling: What do the common failure cases look like and how are they being addressed (either gracefully or otherwise)? Be intentional about failure and its handling.

- Pressure Release Valves: What are the pressure release valves on the system? What causes them to activate? What causes them to turn off? What pressure is each of them supposed to relieve?

- Safety Nets: What does the safety net look like? What causes it to activate? When (and how) does it get shut off in order to return to “normal” operations?

- Backpressure: What happens when things take too long? What does the backpressure look like? How do notifications traverse the system?

- Monitoring and Observability: What does monitoring look like? How do you trace something through the system? How can you introspect or observe operations without disturbing operations?

- Alerting: How do the right people get alerted in the right order and with the right information when something isn’t performing as it should be?

- Resynchronization: How do you handle when things get out of sync? What type of resynchronization options exist in the system? How do they get applied? And how do you know/what do you do when they don’t work as expected?

Most organizations operate as if these questions have been explicitly answered. This is rarely the case. There’s an implicit assumption that someone has thought through what happens when the tech lead is out, what gets dropped when capacity is exceeded, or how teams resync after working in parallel for a few sprints. But when you actually ask, you find there’s typically no pre-thought-out answer, just the assumption that they’ll “make it work.”

These questions apply to distributed software systems just as much as human systems. The goal is to ensure your organization “should” work in a “blue sky” state before applying the people factor. Let me walk through these concepts in practice. The sections (above and below) follow a deliberate progression: know your baseline, assign responsibility, handle problems when they occur, detect problems before they escalate, and realign when drift accumulates.

Operating States

Distributed systems are designed with explicit operating states. You know what normal throughput looks like, with upper and lower bounds. You know what degraded-but-functional looks like: maybe you’re serving cached data, or maybe latency is elevated but requests are completing within an acceptable range. And you know what failure looks like. This means you can distinguish between “we should keep an eye on this” and “wake someone up.”

Most organizations can’t describe their own operating states. What does “normal” actually look like for a team? Not aspirational normal, but real actual normal. How many projects are in flight? What’s the typical cycle time? How much unplanned work is acceptable before it’s a problem for the team, the department, or the company?

Without defining these states, you can’t recognize transitions between them. A team can slide from normal to degraded easily, and usually no one notices because there’s no baseline to compare against. They’ve been running hot for so long that it feels normal. Or worse, a team is actually performing well, but leadership perceives problems because expectations were never correctly calibrated.

Define your states:

- What does “barely functioning” look like? The team is shipping, but just barely, and any additional load will break something. Concretely: PRs sit in review for days, sprint commitments regularly slip, people are working evenings just to stay afloat, and an unexpected sick day throws everything off.

- What does “normal” look like? The pace is sustainable, output is predictable, and the team can absorb a reasonable amount of unplanned work. Cycle time is consistent. Someone can take PTO without everything grinding to a halt. One unexpected incident per sprint doesn’t derail commitments.

- What does “optimized” look like and is that even a state you want to sustain? The team is shipping ahead of schedule, picking up stretch goals, operating with high momentum. But look closer: are they skipping documentation? Working longer hours? Deferring maintenance? Optimized often masks an unsustainable sprint that requires recovery afterward.
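One way to make these definitions concrete is to write them down as explicit thresholds. The sketch below is only an illustration: the three signals, the field names, and every threshold value are assumptions I picked for the example, not recommended numbers. The point is that once the states are written down, a transition between them becomes something you can check for.

```python
from dataclasses import dataclass
from enum import Enum


class TeamState(Enum):
    BARELY_FUNCTIONING = "barely functioning"
    NORMAL = "normal"
    OPTIMIZED = "optimized"


@dataclass
class TeamSnapshot:
    # All fields are hypothetical signals; use whatever your team actually tracks.
    median_review_days: float     # how long PRs wait for review
    commitments_met_ratio: float  # delivered work / committed work (above 1.0 means stretch goals landed)
    unplanned_work_ratio: float   # share of capacity spent on unplanned work (0.0 to 1.0)


def classify(snapshot: TeamSnapshot) -> TeamState:
    """Map a snapshot to an operating state using illustrative thresholds."""
    # Any one of these signals is enough to call the team overloaded.
    if (
        snapshot.median_review_days > 3
        or snapshot.commitments_met_ratio < 0.7
        or snapshot.unplanned_work_ratio > 0.4
    ):
        return TeamState.BARELY_FUNCTIONING
    # Delivering more than was committed with little unplanned work.
    # Worth asking whether this pace is sustainable.
    if snapshot.commitments_met_ratio > 1.0 and snapshot.unplanned_work_ratio < 0.1:
        return TeamState.OPTIMIZED
    return TeamState.NORMAL
```

Comparing this week’s state against last week’s is what turns it into a baseline: a move from `NORMAL` to `BARELY_FUNCTIONING` is a prompt for a conversation, not a verdict.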

Distributed systems also don’t feel guilty for error reporting. Errors are just surfaced as part of normal operations when they occur. Humans, on the other hand, will react to a system or set of policies that punishes anything less than “green.” If your culture equates a degraded status with personal failure, then you are incentivizing your teams to hide issues until they become potentially catastrophic. You don’t want a team that refuses to fail; you want a system where signaling a failure is the expected behavior that triggers a recovery protocol.

The goal isn’t precision. It’s having a shared organizational vocabulary so that when someone says “we’re struggling,” there’s a way to understand what that means relative to expectations. And so that you can catch the slide from normal to degraded before it becomes a crisis.

Ownership and Responsibilities

In distributed systems, every component has a clear owner. When something breaks at 3am on a weekend, there’s no ambiguity about who gets paged. Ownership isn’t just about accountability, it’s about having someone who understands the system and components deeply enough to fix things, evolve them, and make tradeoffs on the system’s behalf.

Organizations are messier by default. Most meaningful work spans multiple functions, which makes clean boundaries rare. That complexity doesn’t eliminate the need for ownership; it makes it more important. Without a clearly accountable owner for decisions and outcomes, responsibility diffuses, decisions stall, and work drifts. The failure mode is ambiguity, not malice. When ownership isn’t explicit, people default to what’s comfortable: they either over-reach into areas that aren’t theirs or under-reach by assuming someone else will handle it. Both create friction, and both need to be addressed explicitly.

Ownership also only works if the system’s incentives are aligned with the decisions the owner is expected to make. If someone is accountable for an outcome but punished for the tradeoffs required to achieve it, the system will produce inconsistent behavior. If a tech lead owns code quality but the team is celebrated for hitting ship dates, quality will erode. This is not because anyone decided to write bad code, but because the incentives made clear what the organization actually rewards. Incentives are the organization’s consistency model. Misaligned incentives don’t create bad actors, they create predictable failure modes.

Clear ownership means someone can answer: who is responsible for this component, decision, or outcome? Not “who contributed” or “who has opinions” — who is the responsible party? This doesn’t mean that person makes every decision unilaterally. But it means there’s no confusion about who makes the final call when there’s disagreement, who gets consulted versus informed, and who’s accountable if things go wrong. Clear ownership also prevents the organizational equivalent of race conditions: two teams independently solving the same problem because neither knew the other was working on it.
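A quick way to test whether ownership is actually explicit is to ask each lead what they believe they own and compare the answers. The sketch below assumes a hypothetical list of `(owner, area)` claims and a list of areas that must have exactly one owner; the function name and data shapes are made up for illustration.

```python
from collections import defaultdict


def audit_ownership(claims, required):
    """Return (unowned, contested) areas.

    claims:   list of (owner, area) pairs, where the owner is whoever
              believes they make the final call for that area.
    required: areas that must have exactly one owner.
    """
    owners_by_area = defaultdict(set)
    for owner, area in claims:
        owners_by_area[area].add(owner)

    # Nobody claims it: the under-reach case.
    unowned = sorted(area for area in required if not owners_by_area.get(area))
    # More than one claimant: the over-reach (race condition) case.
    contested = {
        area: sorted(owners)
        for area, owners in owners_by_area.items()
        if len(owners) > 1
    }
    return unowned, contested


claims = [
    ("payments team", "checkout API"),
    ("platform team", "checkout API"),
    ("platform team", "CI pipeline"),
]
unowned, contested = audit_ownership(
    claims, required=["checkout API", "CI pipeline", "on-call runbook"]
)
```

In this example the on-call runbook has no owner and the checkout API has two, which are exactly the two gaps worth resolving before they surface during an incident.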

You can’t define ownership for every possible situation. But you can make it explicit for the things that matter most: the roles, the systems, the processes, and the recurring decisions around them. When ownership is ambiguous on the important stuff, you’ll spend more time negotiating who decides than actually deciding.

Failure Handling

In distributed systems, you design for failure because you know it’s inevitable. Circuit breakers trip for a reason. Fallback behavior is defined before the incident, not during. If you can’t describe your org’s failure modes, you’re leading on hope.

Organizations rarely get this treatment. What happens when your tech lead is out for two weeks? When the team you depend on misses their deadline? When a launch goes sideways and three things need attention simultaneously?

Most orgs have no explicit answer. They rely on heroics: someone staying late, someone context-switching constantly, someone absorbing work that isn’t theirs. Heroics work right up until they don’t.

You can’t anticipate every failure. But you can pre-decide how to handle the obvious ones. If your tech lead is out, who has decision authority for what? If a blocking dependency slips by more than a week, does the dependent team have permission to de-scope or re-sequence without escalating (but still informing leadership)? What does “degraded but functioning” actually look like: slower response times on non-critical work, a temporary pause on new commitments, or reduced scope on in-flight projects?

The specific answers matter less than having answers at all. Pre-deciding the response to predictable failures means people can follow the plan instead of inventing one under pressure. Sometimes the plan is to do nothing. That’s fine as an outcome as long as it’s an intentional choice rather than paralysis.

If your team can’t ship without one specific person, that’s a single point of failure you’d never tolerate in your infrastructure. Why tolerate it in your organization? The fix can be the same as it is in distributed systems: replication. Ensure knowledge is shared enough that the org can function, even if degraded, without any single person’s presence.

Pressure Release Valves

When load exceeds capacity in a well-designed system, something has to (intentionally) give. This could mean that non-critical work gets shed, systems switch to degraded modes, or resources scale horizontally.

When teams are overloaded, what do they drop? Who decides? Are the changes intentional, like “we’re pausing this initiative for a quarter”? Or are they more chaotic, where quality silently erodes, tech debt accumulates, and people quietly disengage?

Effective pressure release valves are pre-decided. You already know what gets cut first: maybe it’s speculative work before committed roadmap items, or internal tooling before customer-facing features, or new development before incident response. Who can activate the valve should already be decided too. In most organizations, that authority must be shared between Engineering and Product Management. Engineering can’t shed load if product incentives punish missed commitments. Product can’t re-sequence work if Engineering is only measured through output. If there are too many important things, maybe there is an escalation path that short-circuits directly to the VP of Product or VP of Engineering and takes hours rather than days. A pressure release valve that requires cross-functional permission but doesn’t explicitly define it isn’t a valve; it’s a delay.
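A pre-decided shed order can be as simple as a ranked list of work categories. Here is a minimal sketch of that idea, assuming made-up category names, a made-up ranking, and effort measured in some hypothetical unit like person-weeks. It drops the most sheddable work first and stops as soon as what remains fits within capacity.

```python
from dataclasses import dataclass


@dataclass
class WorkItem:
    name: str
    category: str  # one of the keys in SHED_ORDER
    effort: int    # hypothetical unit, e.g. person-weeks


# Pre-decided shed order: lower number is cut first.
# The categories and their ranking are illustrative assumptions.
SHED_ORDER = {
    "speculative": 0,
    "internal_tooling": 1,
    "roadmap": 2,
    "incident_response": 3,
}


def shed_load(items, capacity):
    """Return (kept, shed) so that the kept work fits within capacity."""
    kept = list(items)
    shed = []
    # Walk the work in shed order and drop items until the rest fits.
    for item in sorted(items, key=lambda i: SHED_ORDER[i.category]):
        if sum(i.effort for i in kept) <= capacity:
            break
        kept.remove(item)
        shed.append(item)
    return kept, shed


items = [
    WorkItem("fix outage follow-ups", "incident_response", 3),
    WorkItem("prototype new search", "speculative", 4),
    WorkItem("Q3 billing feature", "roadmap", 5),
    WorkItem("build dashboard", "internal_tooling", 2),
]
kept, shed = shed_load(items, capacity=10)
```

The code is trivial on purpose. The hard part is the conversation that produces `SHED_ORDER`, and having it before the overload rather than during it.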

There also needs to be a clear trigger for the return to normal operations. That could be a new hire ramping up and increasing team capacity. It could be the resolution of an incident that was pulling capacity away from normal work. Or the project could be dropped entirely: if the organization was able to postpone it, it might not be as important as originally thought.

The difference between a planned pressure release and an uncontrolled failure is whether or not you chose it. You can’t anticipate every overload scenario, but you can decide in advance what’s sacred and what’s negotiable. This way when pressure hits, people aren’t inventing policy under stress.

Safety Nets

In distributed systems, safety nets catch failures that slip past your primary defenses. These could present as: retry logic that handles transient errors, dead letter queues that capture messages that couldn’t be processed, or reconciliation jobs that fix data that drifted out of sync. These aren’t your first line of defense. They are the support structure that prevents a small failure from becoming a catastrophic one.

Organizations need safety nets too. What happens when a decision turns out to be wrong? Or when a project is going off the rails but no one wants to say it? What happens when someone is struggling but hasn’t asked for help? The absence of safety nets creates organizational brittleness. Every mistake becomes high-stakes because there’s no recovery mechanism. People become risk-averse because failure isn’t survivable. Innovation dies without the safety of being able to take risks or make mistakes. People begin to hide problems because surfacing them feels like admitting defeat rather than triggering a system designed to help.

Effective organizational safety nets are explicit. Regular retrospectives catch process failures before they compound. Skip-level conversations or office hours surface problems the normal reporting chain might miss. Designated checkpoints make “this isn’t working” a legitimate and expected input, not a career-limiting admission. Clear escalation paths let people voice concerns when someone is in over their head, whether you’re the one struggling or you see someone else who is. Like pressure release valves, safety nets need defined activation conditions and return-to-normal criteria. When does a struggling project get escalated versus given more time? Who has authority to call it? What happens after it’s surfaced? Is there a post-mortem, a pivot, or just a quiet burial?

You can’t build a safety net for every possible failure. But you can make sure people know that safety nets exist, know how to activate them, and trust that using them won’t be held against them. A safety net that no one trusts is just decoration for organizational theater.

Backpressure

When a downstream service can’t keep up, healthy systems signal back: queue depth rises, timeouts trigger, load gets shed, new instances are spun up. Ultimately, these are all variations on the system protecting itself.

When requests pile up on a team, how do they signal capacity problems? In most organizations, they don’t. At least not until the problem becomes visible, like someone burning out or code quality tanking. There’s no queue depth metric for humans, so leaders assume everything is fine until it visibly isn’t.

The fix is making capacity visible and giving teams explicit permission to signal it. This might be a work-in-progress limit that’s actually enforced. For example, when a team is at 2 active projects, a 3rd doesn’t start until something finishes. It might be a standing agreement that when a team’s actual work consistently exceeds its estimates, often a sign they’re either underestimating work or absorbing unplanned scope, they can push back on timelines without needing executive approval (though the pushback must still be communicated). Or it might just be a regular check-in where “we’re at capacity” is a legitimate answer that triggers reprioritization rather than a problem to be solved through more effort.
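The work-in-progress limit is the easiest of these to sketch, because it is essentially a bounded queue. The class below is a hypothetical illustration, not a tool recommendation: the names `TeamIntake`, `request`, and `finish` are made up, and the default limit of 2 simply mirrors the example above.

```python
from collections import deque


class TeamIntake:
    """Accept new projects only while the team is under its WIP limit."""

    def __init__(self, wip_limit=2):
        self.wip_limit = wip_limit
        self.active = []        # projects currently in flight
        self.waiting = deque()  # projects queued until a slot opens

    def request(self, project):
        """Start the project if there is capacity; otherwise queue it.

        Returns True when the project starts now, False when it has to wait.
        A False is the backpressure signal the requester should see.
        """
        if len(self.active) < self.wip_limit:
            self.active.append(project)
            return True
        self.waiting.append(project)
        return False

    def finish(self, project):
        """Complete a project and pull the next waiting one, if any."""
        self.active.remove(project)
        if self.waiting:
            self.active.append(self.waiting.popleft())


intake = TeamIntake(wip_limit=2)
started = [intake.request(name) for name in ("A", "B", "C")]
intake.finish("A")
```

The design choice that matters is that `request` returns an answer immediately. The requester learns at intake time that the third project is waiting, rather than discovering it weeks later.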

But the signal only matters if it closes the loop. In systems, backpressure works because the signal triggers a response: load gets shed, resources scale, something changes. If a team signals capacity challenges and nothing happens, you don’t have a backpressure mechanism; you have a pressure gauge everyone’s learned to ignore.

The specifics depend on your context. What matters is that the signal exists, it’s visible to the people who can act on it, and using it doesn’t require heroics or political or social capital.

Monitoring and Observability

You can’t operate a distributed system you can’t observe. Monitoring tells you the system’s vital signs. Observability lets you ask questions you didn’t anticipate, like tracing a request through multiple services, understanding why latency spiked last Tuesday, or finding the correlation between two seemingly unrelated symptoms.

Real organizations need both. Monitoring is the regular check-in: are projects on track? Is the team healthy? Are dependencies being met? Observability is the ability to investigate when something feels off but you’re not sure what. It’s the ability to trace a decision through the system, to understand how information flowed (or didn’t) and to diagnose why something that should have worked, didn’t.

Most organizations have some monitoring. Often these present as status updates, sprint reviews, or quarterly business reviews. Fewer have observability. When a project goes sideways, can you reconstruct what happened? Not to assign blame, but to understand the system failure. How did this decision get made? Who had what information at what point? Where did the signal get lost?

This is partly about documentation artifacts like decision logs, RFCs, or written rationale. But it’s also about reinforcing culture. Can you ask “how did we get here?” without it sounding like an accusation? Can you trace the path of a decision without people getting defensive? Or more importantly, if people do get defensive, which is natural, can you work through it rather than around it?

Instrumentation also comes at a cost. In software, it’s CPU, storage, and some measurable but often negligible impact on performance. In organizations, it’s meetings and documentation. Every status update or RFC is a context switch that takes cycles away from shipping. The goal isn’t surveillance or 100% observability. That would basically just be bureaucracy. The goal is the ability to introspect on your organization’s operations when necessary without overly disturbing those operations, and to understand how work and information actually flow, not just how they’re supposed to flow. You want the minimum amount of instrumentation required to eliminate blind spots without turning your engineers into full-time reporters. If the “monitoring tax” prevents the “nodes” from processing the work, you’ve over-engineered the management layer.

Alerting

Distributed systems have alerting hierarchies. Not every anomaly pages someone at 3am on a weekend. Alerts are tiered by severity, routed to the right people, and designed to include enough context that the responder can act without starting from scratch. Bad alerting is noise that gets ignored. Good alerting is signal that reaches the right person at the right time with the right information.

Organizations are notoriously terrible at this. Problems surface through informal channels that not everyone is privy to. These are things like hallway conversations, Slack threads, a buried email response in a long chain, or offhand comments in unrelated meetings. The people who need to know often find out last. Or they find out early but without enough context to understand the severity. Or even worse, they’re drowning in low-grade noise and miss the signal that actually matters.

Think about how problems traverse your organization. When something isn’t performing as expected, how do the right people get alerted? In what order and with what information does that alert surface? Is there a clear escalation path, or does it depend on who happens to know who? This isn’t about creating bureaucratic reporting chains. It’s about ensuring that when something matters, it reaches someone who can act on it quickly, with enough context to understand the situation, and without requiring the person raising the issue to navigate political minefields to be heard.

Pre-decide your alerting paths for the predictable categories of problems. Technical incidents probably have an on-call rotation. But what about project risks? Interpersonal conflicts? Strategic concerns? If someone spots a problem, do they know where to route it? Do they trust that routing it will actually result in action? Alerts that go nowhere teach people to stop alerting. If raising a concern consistently results in nothing happening, people stop raising concerns. They’re not disengaged, they’ve just been taught that the alerting system doesn’t work.
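Pre-decided alerting paths amount to a routing table. The sketch below shows one possible shape for it. Every category, severity level, and recipient is a placeholder I invented for illustration, and the rule that an alert must carry context is my own assumption about what “the right information” could mean in practice.

```python
# (category, severity) -> who hears about it first.
# All entries are placeholders for illustration.
ROUTES = {
    ("technical_incident", "high"): "on-call engineer",
    ("technical_incident", "low"): "team backlog triage",
    ("project_risk", "high"): "engineering manager",
    ("project_risk", "low"): "weekly project review",
    ("interpersonal", "high"): "engineering manager",
    ("strategic", "high"): "director of engineering",
}

# Anything without a pre-decided route still lands with a named person.
DEFAULT_ROUTE = "engineering manager"


def route_alert(category, severity, context):
    """Return a routed alert, refusing alerts that arrive without context."""
    if not context.strip():
        raise ValueError("an alert needs enough context for the responder to act")
    recipient = ROUTES.get((category, severity), DEFAULT_ROUTE)
    return {
        "to": recipient,
        "category": category,
        "severity": severity,
        "context": context,
    }


alert = route_alert(
    "project_risk", "high", "Vendor API slips two weeks and blocks the launch"
)
```

The default route is the important detail: a concern that matches no category should still reach someone, not disappear.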

Resynchronization

Nodes drift out of sync. It’s not a bug, it’s the physics of operating at a certain scale. What matters here is your reconciliation strategy. How do you detect divergence and bring things back into alignment?

Teams drift too. Priorities diverge. Teams’ understanding of the broader strategy shifts as they dig deeper into their own piece of the roadmap. Information and knowledge become more asymmetric. Two groups make conflicting decisions because neither knew enough about what the other was doing. This is usually misinterpreted as dysfunction. But it’s the natural state of any organization past a certain size. The question isn’t “how do we prevent drift?” You can’t. The question is “how do we detect it early and reconcile cheaply?”

Detection mechanisms look and feel mundane. They might be a weekly cross-team sync or group scrum where leads share what they’re working on, or an architecture review where decisions get surfaced before they’re locked in. Sometimes it’s an RFC that proposes solving a problem no one expected to exist. That’s a signal worth paying attention to. It could mean there’s misalignment at the engineering level, that the team is working on something that doesn’t match leadership’s understanding of priorities, or that there’s a real problem leadership hasn’t recognized yet. In any of these cases, the RFC becomes another tool to surface and resolve the gap.

The signal that you’ve drifted is usually more of a surprise than you want it to be. You learn something about another team’s direction that contradicts your assumptions about what they should be working on or how they’re solving an issue. If that surprise happens in a meeting scheduled for exactly that purpose, like an architecture review, the cost of reconciliation is low. If it happens during an integration test two months later, it’s not.
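If you want a mechanical version of that surprise, have each team write down its working assumptions about shared topics and compare them. This is a toy sketch under that assumption; the function name, the team names, and the topics are all hypothetical.

```python
def find_drift(assumptions_by_team):
    """Return the topics on which teams hold different assumptions.

    assumptions_by_team: {team: {topic: assumption}}
    Result:              {topic: {team: assumption}} for conflicting topics only.
    """
    by_topic = {}
    for team, assumptions in assumptions_by_team.items():
        for topic, value in assumptions.items():
            by_topic.setdefault(topic, {})[team] = value
    # A topic has drifted when the teams that mention it disagree.
    return {
        topic: views
        for topic, views in by_topic.items()
        if len(set(views.values())) > 1
    }


drift = find_drift({
    "search": {"auth": "OAuth tokens", "launch": "Q3"},
    "mobile": {"auth": "session cookies", "launch": "Q3"},
})
```

Here the two teams agree on the launch quarter but not on the auth approach, so only `auth` is reported. Finding that in a review meeting is cheap; finding it during integration is not.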

You don’t need perfect synchronization. You need a regular cadence where misalignment can surface before it becomes expensive.

The People Factor

I want to be absolutely clear about this. None of this thinking means that we are treating humans like machines. The system design gives you the structure. It tells you whether data flows sensibly between teams, whether groups can operate with appropriate independence, or whether the pieces should fit together at all.

The people factor is where you figure out how well the humans in those teams actually work together. How they collaborate with adjacent and complementary teams. Whether they trust each other, communicate openly, and extend grace when things get hard.

I’ve seen teams with good structure fail because of bad human dynamics. They had clear ownership, defined backpressure mechanisms, regular syncs; all the structural pieces were in place. But there was unresolved tension between some of the leads. Information technically flowed through the right channels, but it was filtered, delayed, or delivered without the context or timing needed to act on it. The safety nets existed, but no one used them because admitting struggle felt like losing a political battle. The structure was correct, but the system still failed.

I’ve also seen the inverse: teams with almost no formal structure succeed through sheer goodwill and constant communication. No documented ownership, no explicit failure handling, and no formal resync mechanisms. But the people trusted each other deeply. They routed around the structural gaps through heroics and relationships. It worked until someone left, the team grew, or pressure exceeded what relationships alone could absorb. Then there was nothing to fall back on. The human dynamics were strong, but the system was brittle.

This is a common trap. Strong relationships can compensate for missing structure and can carry an organization surprisingly far. Trust, goodwill, and constant communication let teams route around broken or nonexistent systems. But relationships don’t scale, systems do. Growth, turnover, and shipping pressure will eventually overwhelm informal communication and coordination. At that point, the absence of structure doesn’t preserve culture, it consumes it. The structure is now what keeps the organization from relying on heroics and burning out the very relationships it depends on.

Structure also can’t replace trust. Process can’t replace psychological safety. But those human elements also can’t compensate for a fundamentally broken structure. Empathy and good intentions won’t fix an org design that requires constant heroics, provides no backpressure relief mechanisms, and has no way to detect when teams have drifted apart.

You need both: a structure that works on paper and the human protocols, like empathy, safety, clarity, and authenticity, that make it work in practice.

Run the Diagnostics

The value of this mental model isn’t having the perfect set of answers all the time. It’s about having any answers and having considered the structural parts of the system more thoroughly.

Start by asking the questions most organizations never ask outright: What does degraded-but-functioning actually look like for each of your teams? Would you recognize it if you saw it? Where are the single points of failure in people, not systems? How do teams signal they’re at capacity before someone burns out? When teams get out of sync, how do you detect it? How do you reconcile? What are your intentional pressure release valves, and who has authority to activate them?

If you can’t answer these questions, you’re running an organization without knowing its failure modes. You’d never ship software that way. Don’t ship an org that way either.